# Self-Hosted GitHub Actions Runner on Repurposed Laptop

I've been working on the Kodelet project, a Go-based coding agent that needs to be compiled into single static binaries for multiple operating systems (Linux, macOS, Windows) and architectures (ARM64, AMD64). To ensure reproducible builds, I've been running Go cross-compilation inside a Docker container.

GitHub Actions provides 4 cores and 16GB of RAM on their standard runners for public repositories. For a large Go project with multiple target platforms, compilation was taking up to 5 minutes. While not terrible, when you're iterating quickly during active development, those minutes add up.

Meanwhile, I had a ThinkPad T14 AMD laptop that had been gathering dust since 2022. It has 8 cores and 16 threads (AMD Ryzen 7 PRO 4750U) plus 32GB of RAM, significantly more than GitHub's hosted runners offer. A couple of months ago, I had already repurposed it into a lab server running Ubuntu 24.04 and k3s. I mainly use it as a Tailscale exit node, so I can reach my home network and UK region-locked services from overseas without getting bombarded with captchas.

To scratch the itch of optimising my build times and making better use of this hardware, I decided to set up a self-hosted GitHub Actions runner on this laptop.

## Kubernetes-Based Runner Architecture

Instead of running GitHub Actions runners directly on the host, I deployed them on the existing k3s cluster using Actions Runner Controller (ARC). Compared with a bare-host runner, this gives several advantages:

- better isolation and reproducibility, because each job runs in a fresh ephemeral pod;
- more automation;
- easier scaling;
- integration with the existing Kubernetes infrastructure.
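ARC is installed as Helm charts, so Terraform needs the Helm and Kubernetes providers pointed at the k3s cluster. Below is a minimal sketch of that root-level setup, built on a few assumptions:

- The version constraints are assumptions.
- The kubeconfig path is hypothetical: a copy of k3s's `/etc/rancher/k3s/k3s.yaml` with its server address made reachable from wherever Terraform runs.
- The nested `kubernetes {}` block is helm provider 2.x syntax. Newer major versions changed it.

```hcl
terraform {
  required_providers {
    # Assumed version constraints; the `kubernetes {}` block inside
    # provider "helm" below is the 2.x syntax.
    helm = {
      source  = "hashicorp/helm"
      version = "~> 2.0"
    }
    kubernetes = {
      source  = "hashicorp/kubernetes"
      version = "~> 2.0"
    }
  }
}

locals {
  # Hypothetical path to a copy of the k3s kubeconfig
  kubeconfig_path = pathexpand("~/.kube/k3s-lab.yaml")
}

# Used for namespaces and other plain Kubernetes resources
provider "kubernetes" {
  config_path = local.kubeconfig_path
}

# Used for the ARC controller and runner scale set charts
provider "helm" {
  kubernetes {
    config_path = local.kubeconfig_path
  }
}
```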

### The GitHub Actions Terraform Module

The setup is managed through Terraform. I created a reusable module that handles the entire deployment. Here's the core of the module implementation:

```hcl
# modules/github-actions/variables.tf

variable "controller_namespace" {
  description = "Namespace for the Actions Runner Controller"
  type        = string
  default     = "arc-systems"
}

variable "controller_release_name" {
  description = "Helm release name for the Actions Runner Controller"
  type        = string
  default     = "arc"
}

# Referenced by main.tf. If left null, the Helm provider installs the latest
# chart version; pinning a version keeps deploys reproducible.
variable "controller_chart_version" {
  description = "Chart version for the ARC controller and runner scale set charts"
  type        = string
  default     = null
}

variable "metrics_enabled" {
  description = "Enable metrics collection from the controller"
  type        = bool
  default     = false
}

variable "log_level" {
  description = "Log level for the controller (debug, info, warn, error)"
  type        = string
  default     = "info"
}

variable "log_format" {
  description = "Log format for the controller (text, json)"
  type        = string
  default     = "text"
}

variable "dind_mtu" {
  description = "MTU for Docker-in-Docker networking"
  type        = number
  default     = 1500
}

variable "controller_resources" {
  description = "Resource requests and limits for the controller pod"
  type = object({
    requests = optional(map(string), {})
    limits   = optional(map(string), {})
  })
  default = {}
}

# Set by lab/main.tf, so the module must declare it
variable "runner_max_concurrent_reconciles" {
  description = "Maximum number of ephemeral runners the controller reconciles concurrently"
  type        = number
  default     = 2 # assumed to match the chart's default
}

variable "create_runner_secret_store" {
  description = "Create external secret store and secret for GitHub runner token"
  type        = bool
  default     = false
}

variable "gcp_project_id" {
  description = "GCP project ID for Secret Manager access"
  type        = string
  default     = ""
}

variable "github_secret_name" {
  description = "Name of the GitHub token secret in Google Secret Manager"
  type        = string
  default     = "github-actions-runner-secret"
}

variable "runners_namespace" {
  description = "Namespace for runner scale sets"
  type        = string
  default     = "arc-runners"
}

variable "runner_scale_sets" {
  description = "Map of runner scale sets to create (one per repository)"
  type = map(object({
    installation_name  = string
    github_config_url  = string
    github_secret_name = optional(string, "github-runner-token")
    min_runners        = optional(number, 0)
    max_runners        = optional(number, 5)
    runner_image       = optional(string, "ghcr.io/actions/actions-runner:latest")
    runner_resources = optional(object({
      requests = optional(map(string), {})
      limits   = optional(map(string), {})
    }), {})
    labels = optional(map(string), {})
  }))
  default = {}
}
```
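The `log_level` and `log_format` descriptions list the accepted values. As an illustrative addition that is not part of the original module, Terraform `validation` blocks could reject typos at plan time. These declarations would replace the existing two rather than sit alongside them:

```hcl
variable "log_level" {
  description = "Log level for the controller (debug, info, warn, error)"
  type        = string
  default     = "info"

  # Fail at plan time instead of passing an unknown level to the controller
  validation {
    condition     = contains(["debug", "info", "warn", "error"], var.log_level)
    error_message = "log_level must be one of: debug, info, warn, error."
  }
}

variable "log_format" {
  description = "Log format for the controller (text, json)"
  type        = string
  default     = "text"

  validation {
    condition     = contains(["text", "json"], var.log_format)
    error_message = "log_format must be either text or json."
  }
}
```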

```hcl
# modules/github-actions/main.tf

# Install Actions Runner Controller using Helm
resource "helm_release" "arc_controller" {
  name             = var.controller_release_name
  namespace        = kubernetes_namespace.arc_systems.metadata[0].name
  create_namespace = false
  chart            = "oci://ghcr.io/actions/actions-runner-controller-charts/gha-runner-scale-set-controller"
  version          = var.controller_chart_version

  values = [
    yamlencode({
      metrics = var.metrics_enabled ? {
        controllerManagerAddr = ":8080"
        listenerAddr          = ":8080"
        listenerEndpoint      = "/metrics"
      } : null

      # Requests/limits for the controller pod
      resources = var.controller_resources

      flags = {
        logLevel                      = var.log_level
        logFormat                     = var.log_format
        runnerMaxConcurrentReconciles = var.runner_max_concurrent_reconciles
      }
    })
  ]
}

# Runner scale sets - one per repository with Docker-in-Docker template
resource "helm_release" "runner_scale_sets" {
  for_each = var.runner_scale_sets

  name      = each.value.installation_name
  namespace = kubernetes_namespace.arc_runners[0].metadata[0].name
  chart     = "oci://ghcr.io/actions/actions-runner-controller-charts/gha-runner-scale-set"
  # Keep the scale set chart on the same version as the controller chart
  version = var.controller_chart_version

  values = [
    yamlencode({
      githubConfigUrl    = each.value.github_config_url
      githubConfigSecret = var.create_runner_secret_store ? "github-runner-token" : each.value.github_secret_name
      minRunners         = each.value.min_runners
      maxRunners         = each.value.max_runners

      # Docker-in-Docker template configuration
      template = {
        metadata = {
          labels = each.value.labels
        }
        spec = {
          initContainers = [
            {
              # Copy the runner's externals into a volume shared with dind
              name    = "init-dind-externals"
              image   = "ghcr.io/actions/actions-runner:latest"
              command = ["cp", "-r", "/home/runner/externals/.", "/home/runner/tmpDir/"]
              volumeMounts = [{
                name      = "dind-externals"
                mountPath = "/home/runner/tmpDir"
              }]
            },
            {
              name  = "dind"
              image = "docker:dind"
              args = [
                "dockerd",
                "--host=unix:///var/run/docker.sock",
                "--group=$(DOCKER_GROUP_GID)",
                # Match the pod network MTU (see "The MTU Challenge")
                "--mtu=${var.dind_mtu}",
                "--default-network-opt=bridge=com.docker.network.driver.mtu=${var.dind_mtu}",
              ]
              securityContext = { privileged = true }
              # ... additional dind configuration
            },
          ]
          containers = [{
            name      = "runner"
            image     = each.value.runner_image
            command   = ["/home/runner/run.sh"]
            resources = each.value.runner_resources
            env = [
              { name = "DOCKER_HOST", value = "unix:///var/run/docker.sock" },
              { name = "RUNNER_WAIT_FOR_DOCKER_IN_SECONDS", value = "120" },
            ]
          }]
        }
      }
    })
  ]
}
```

```hcl
# modules/github-actions/outputs.tf

output "controller_namespace" {
  description = "The namespace where the Actions Runner Controller is deployed"
  value       = kubernetes_namespace.arc_systems.metadata[0].name
}

output "controller_release_name" {
  description = "The Helm release name for the Actions Runner Controller"
  value       = helm_release.arc_controller.name
}

output "controller_status" {
  description = "Status of the Actions Runner Controller Helm release"
  value       = helm_release.arc_controller.status
}

output "runners_namespace" {
  description = "The namespace where runner scale sets are deployed"
  value       = length(var.runner_scale_sets) > 0 ? kubernetes_namespace.arc_runners[0].metadata[0].name : null
}

output "runner_scale_sets" {
  description = "Information about deployed runner scale sets"
  value = {
    for k, v in helm_release.runner_scale_sets : k => {
      name        = v.name
      namespace   = v.namespace
      status      = v.status
      config_url  = var.runner_scale_sets[k].github_config_url
      min_runners = var.runner_scale_sets[k].min_runners
      max_runners = var.runner_scale_sets[k].max_runners
    }
  }
}

output "github_runner_secret_store_created" {
  description = "Whether the external secret store was created"
  value       = var.create_runner_secret_store
}
```
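The module excerpt references `kubernetes_namespace.arc_systems` and `kubernetes_namespace.arc_runners[0]` without showing them. Below is a minimal sketch of what those resources might look like. It assumes they are plain namespaces named from the module's variables. The runners namespace is only created when scale sets exist, mirroring the check in the `runners_namespace` output:

```hcl
# Hypothetical sketch of the namespaces referenced by main.tf and outputs.tf
resource "kubernetes_namespace" "arc_systems" {
  metadata {
    name = var.controller_namespace
  }
}

# Created only when at least one scale set is defined, matching the
# `length(var.runner_scale_sets) > 0` check in outputs.tf
resource "kubernetes_namespace" "arc_runners" {
  count = length(var.runner_scale_sets) > 0 ? 1 : 0

  metadata {
    name = var.runners_namespace
  }
}
```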

### Using the Module in lab/main.tf

Here's how the module is configured in my lab setup:

```hcl
module "github_actions" {
  source = "../modules/github-actions"

  # Basic configuration
  controller_namespace    = "arc-systems"
  controller_release_name = "arc"

  # Enable metrics for monitoring
  metrics_enabled = true

  # Configure logging for lab environment
  log_level  = "info"
  log_format = "json"

  # Configure Docker-in-Docker MTU for lab environment
  dind_mtu = 1230

  # Resource management for lab cluster
  controller_resources = {
    requests = {
      cpu    = "100m"
      memory = "128Mi"
    }
    limits = {
      cpu    = "200m"
      memory = "256Mi"
    }
  }

  # Conservative for the lab: reconcile one ephemeral runner at a time
  runner_max_concurrent_reconciles = 1

  # External Secrets Configuration
  create_runner_secret_store = true
  gcp_project_id             = local.project_id
  github_secret_name         = "github-actions-runner-secret"

  # Runner Scale Sets
  runners_namespace = "arc-runners"
}
```

### Repository-Specific Runner Scale Sets

Each repository gets its own runner scale set with tailored resource allocations. The Kodelet project, being the most resource-intensive, gets generous allocations:

```hcl
runner_scale_sets = {
  "kodelet" = {
    installation_name = "kodelet-runner"
    github_config_url = "https://github.com/jingkaihe/kodelet"

    # Scaling configuration
    min_runners = 0
    max_runners = 5

    # Resource allocation
    runner_resources = {
      requests = {
        cpu    = "2000m"
        memory = "4Gi"
      }
      limits = {
        memory = "8Gi"
      }
    }

    # Labels for organisation
    labels = {
      "repository" = "kodelet"
      "type"       = "application"
    }
  }

  # Additional repositories with lighter resource requirements...
}
```

## The MTU Challenge

One interesting challenge was networking inside the Docker-in-Docker (dind) setup. Docker's bridge network defaults to an MTU of 1500, which didn't match the smaller pod CNI MTU. The result was bizarre connectivity issues: google.com and the Debian repositories were reachable, but connections to github.com would hang. This turned out to be a classic MTU mismatch.

The key was configuring the dind container to use a custom MTU value that matches the pod CNI MTU:

```hcl
# Configure Docker-in-Docker MTU for lab environment
dind_mtu = 1230
```

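In the module, this value is passed to the Docker daemon. `--mtu` applies to the default bridge network, while `--default-network-opt` applies the same MTU to user-defined bridge networks (such as those created by `docker network create` or Compose):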

```hcl
args = [
  "dockerd",
  "--host=unix:///var/run/docker.sock",
  "--group=$(DOCKER_GROUP_GID)",
  "--mtu=${var.dind_mtu}",
  "--default-network-opt=bridge=com.docker.network.driver.mtu=${var.dind_mtu}"
]
```

This simple configuration change eliminated all the janky networking issues. It's a lesson I keep reminding myself of: when you run into network issues, always check DNS first and then MTU :)

## GitHub Authentication

For authentication, I use a classic Personal Access Token (PAT) with the `repo` scope. Since all repositories are under my personal account, organisation-level permissions aren't needed. The token is stored in Google Secret Manager. External Secrets Operator syncs it into a Kubernetes secret under the `github_token` key that ARC expects:

```yaml
apiVersion: external-secrets.io/v1beta1
kind: ExternalSecret
metadata:
  name: github-runner-token
  namespace: arc-runners
spec:
  refreshInterval: 1h
  secretStoreRef:
    name: github-runner-secret-store
    kind: SecretStore
  target:
    name: github-runner-token
  data:
    - secretKey: github_token
      remoteRef:
        key: github-actions-runner-secret
```
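The `ExternalSecret` points at a `SecretStore` named `github-runner-secret-store`, which the module creates when `create_runner_secret_store = true`. Below is a hypothetical sketch of how both objects could be declared inside the module with `kubernetes_manifest`. The Terraform resource names are illustrative, and the sketch assumes the ESO `gcpsm` provider can find credentials on its own, since no `auth` block is shown:

```hcl
resource "kubernetes_manifest" "github_runner_secret_store" {
  count = var.create_runner_secret_store ? 1 : 0

  manifest = {
    apiVersion = "external-secrets.io/v1beta1"
    kind       = "SecretStore"
    metadata = {
      name      = "github-runner-secret-store"
      namespace = var.runners_namespace
    }
    spec = {
      provider = {
        gcpsm = {
          projectID = var.gcp_project_id
          # Assumption: no `auth` block. Clusters outside GCP usually need
          # auth.secretRef pointing at a service-account key.
        }
      }
    }
  }

  depends_on = [kubernetes_namespace.arc_runners]
}

# Same ExternalSecret as the YAML above, driven by module variables
resource "kubernetes_manifest" "github_runner_token" {
  count = var.create_runner_secret_store ? 1 : 0

  manifest = {
    apiVersion = "external-secrets.io/v1beta1"
    kind       = "ExternalSecret"
    metadata = {
      name      = "github-runner-token"
      namespace = var.runners_namespace
    }
    spec = {
      refreshInterval = "1h"
      secretStoreRef = {
        name = "github-runner-secret-store"
        kind = "SecretStore"
      }
      target = {
        name = "github-runner-token"
      }
      data = [{
        secretKey = "github_token"
        remoteRef = {
          key = var.github_secret_name
        }
      }]
    }
  }

  depends_on = [kubernetes_manifest.github_runner_secret_store]
}
```

One caveat with this approach: `kubernetes_manifest` needs the External Secrets CRDs to already exist in the cluster at plan time. External Secrets Operator therefore has to be installed before this module is applied.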

## Deployment Details

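After deployment, ARC runs several components across the two namespaces:

- Controller (`arc-systems`): manages the lifecycle of runner scale sets
- Listener (`arc-systems`): one per scale set, watches for GitHub workflow jobs
- Runner pods (`arc-runners`): ephemeral pods created on demand for each job

Workflows select a scale set by its installation name, for example `runs-on: kodelet-runner` for the Kodelet scale set.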

The runner pods use a comprehensive Docker-in-Docker template with init containers for setting up the environment, volume mounts for the Docker socket and workspace, and proper health checks to ensure the Docker daemon is ready before starting jobs.
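The dind container's settings are elided in the module excerpt above. Below is a hypothetical sketch of those pieces, written as HCL locals with illustrative names. It is modelled on the dind example in ARC's `gha-runner-scale-set` chart rather than the author's exact configuration, so check values such as the group ID and probe timings against the chart version you deploy:

```hcl
locals {
  # Extra attributes for the "dind" init container
  dind_sidecar_extras = {
    # Native sidecar: dockerd keeps running next to the runner instead of
    # blocking pod start-up (sidecar containers need Kubernetes 1.29+).
    restartPolicy = "Always"

    # Resolves the $(DOCKER_GROUP_GID) reference in the dockerd args
    env = [{ name = "DOCKER_GROUP_GID", value = "123" }]

    # Readiness gate: the sidecar only counts as started once the daemon
    # answers `docker info` (up to 24 x 5 s = 2 minutes).
    startupProbe = {
      exec             = { command = ["docker", "info"] }
      periodSeconds    = 5
      failureThreshold = 24
    }

    volumeMounts = [
      { name = "work", mountPath = "/home/runner/_work" },
      { name = "dind-sock", mountPath = "/var/run" },
      { name = "dind-externals", mountPath = "/home/runner/externals" },
    ]
  }

  # Mounts for the "runner" container: shared workspace and Docker socket
  runner_volume_mounts = [
    { name = "work", mountPath = "/home/runner/_work" },
    { name = "dind-sock", mountPath = "/var/run" },
  ]

  # Pod-level scratch volumes shared between the containers
  pod_volumes = [
    { name = "work", emptyDir = {} },
    { name = "dind-sock", emptyDir = {} },
    { name = "dind-externals", emptyDir = {} },
  ]
}
```

These pieces could be combined with the existing definitions in two places:

- In the dind container, for example `merge({ name = "dind", ... }, local.dind_sidecar_extras)`.
- In the template spec, with `volumes = local.pod_volumes` next to `initContainers` and `containers`.

The two-minute probe window lines up with `RUNNER_WAIT_FOR_DOCKER_IN_SECONDS = "120"` on the runner container.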

## Performance Improvements

The performance gains are substantial. Here's the before and after comparison for cross-platform compilation:

Before (GitHub Hosted Runner):

```text
#18 0.106 Building for linux/amd64...
#18 33.50 Building for linux/arm64...
#18 65.95 Building for darwin/amd64...
#18 97.45 Building for darwin/arm64...
#18 128.8 Building for windows/amd64...
#18 161.1 Cross-build completed successfully!
```

After (Self-Hosted Runner):

```text
#16 0.202 Building for linux/amd64...
#16 20.97 Building for linux/arm64...
#16 41.03 Building for darwin/amd64...
#16 59.47 Building for darwin/arm64...
#16 78.31 Building for windows/amd64...
#16 98.02 Cross-build completed successfully!
```

The build time dropped from 161 seconds to 98 seconds. That is about 39% less wall-clock time, or roughly a 1.6× speedup. While not an order of magnitude faster, it's a significant improvement that adds up over many builds.
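Working from the log timestamps, the same gain can be expressed two ways. One is the ratio of total build times (speedup). The other is the fraction of time saved. Averaged over the five targets, the per-target time falls from about 32 s to about 20 s:

$$
\begin{aligned}
\text{speedup} &= \frac{161.1\,\text{s}}{98.02\,\text{s}} \approx 1.64 \\
\text{time saved} &= 1 - \frac{98.02\,\text{s}}{161.1\,\text{s}} \approx 0.39 \\
\bar{t}_{\text{hosted}} &= 161.1\,\text{s} / 5 \approx 32.2\,\text{s} \\
\bar{t}_{\text{self-hosted}} &= 98.02\,\text{s} / 5 \approx 19.6\,\text{s}
\end{aligned}
$$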

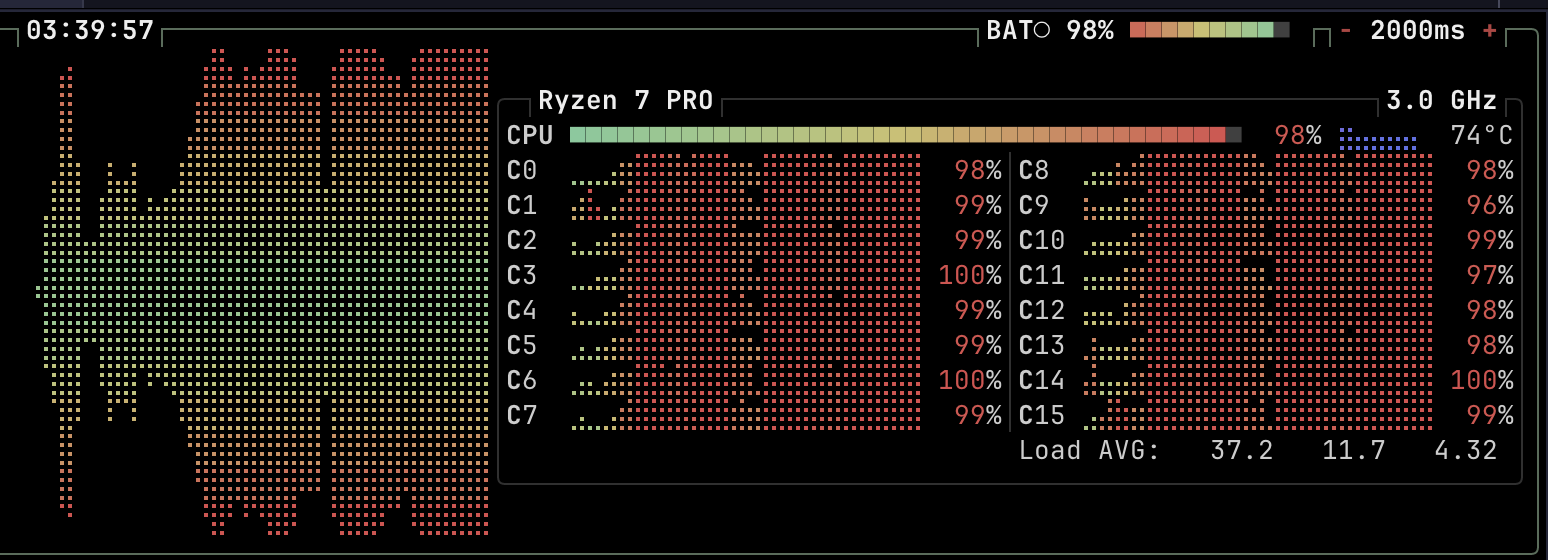

During compilation, the laptop's CPU utilization tells the whole story:

All 16 cores running at 96-100% utilization with a load average of 37.2, clearly putting the hardware to good use.

## Security Considerations

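Running Docker-in-Docker requires privileged mode, which isn't ideal from a security perspective. This setup isn't suitable for untrusted code or multi-tenant workloads. However, as the sole user of this runner, I find the security boundaries acceptable for my use case. One caveat applies to public repositories. GitHub's documentation recommends using self-hosted runners only with private repositories, because a pull request from a fork can run arbitrary code on the runner. Fork-triggered workflows should therefore stay off this runner. For additional sandbox isolation, gVisor could be added, but that's a project for another day.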

## Lessons Learned

Several key insights emerged from this project:

Performance: Self-hosting on underutilized hardware can outperform hosted runners, especially for CPU-intensive tasks like compilation.

Modern Infrastructure: Tools like k3s and Tailscale make it remarkably easy to repurpose old hardware into useful lab servers accessible from anywhere.

Hardware Capability: Even "old" machines like the ThinkPad T14 AMD remain very capable for self-hosting workloads. Its 16 hardware threads and 32GB of RAM handle multiple concurrent builds without breaking a sweat.

Learning Value: Beyond the practical benefits, setting up self-hosted runners on Kubernetes provides valuable hands-on experience with container orchestration, networking, and CI/CD infrastructure.

## Conclusion

Repurposing an unused laptop into a self-hosted GitHub Actions runner proved both educational and practical. The roughly 1.6× faster builds justify the setup effort, and the infrastructure serves multiple purposes beyond CI/CD. For developers with spare hardware and moderate workload requirements, self-hosting runners on Kubernetes offers a compelling alternative to cloud-based solutions.

The entire configuration is managed through Terraform, making it reproducible and maintainable. As workload requirements grow, the setup can easily scale by adding more nodes to the k3s cluster or adjusting resource allocations through the Terraform configuration.